Strategies, governance, and the rules you need to know to improve data quality (2026)

Data quality is an essential element in your data governance strategy.

This means taking the time to develop quality rules so that teams work with data they can trust.

Here are our top five tips for creating standard rules for reliable data.

TL;DR (Summary)

Data quality is the cornerstone of any modern data governance and AI governance strategy. High-quality data allows organizations to make informed decisions, accelerate AI adoption, and reduce operational risk.

This guide walks through the essential pillars of a successful data quality program—including governance frameworks, data catalogs, metadata management, and quality rules—and explains how to implement them step by step.

It also shows why a Data & AI Product Governance platform like DataGalaxy is the most effective way to operationalize data quality at scale.

What is data quality?

Data quality refers to the degree to which data is accurate, complete, consistent, timely, and reliable enough to support an organization’s operational, analytical, and AI-driven use cases.

High-quality data correctly represents the real-world entities and processes it describes, allowing teams to make confident decisions, build trustworthy AI models, and ensure compliance with internal policies and external regulations.

In modern data governance, data quality is evaluated across several key dimensions—such as accuracy, completeness, validity, consistency, uniqueness, timeliness, and integrity.

When these dimensions are monitored and managed effectively, organizations can reduce risk, eliminate inefficiencies, and drive measurable business value from their data assets.

Put simply: data quality is the foundation that ensures your data—and all the insights, analytics, and AI that depend on it—can be trusted.

Why is data quality important?

In today’s AI-driven landscape, organizations no longer view data as just a byproduct of operations. Data is now a strategic asset, powering analytics, machine learning models, automation, and product innovation. Poor-quality data leads to poor-quality outcomes—misguided decisions, compliance failures, revenue loss, and distrust across teams.

To navigate this complexity, enterprises must build continuous, scalable data quality programs supported by strong governance practices, automation, and cross-functional collaboration.

This article provides a modern, comprehensive roadmap to improving data quality, aligned with DataGalaxy’s approach to data & AI product governance.

How to improve data quality in 8 steps (2026)

1. Understand your data landscape

Understanding your data environment is the foundation of all data quality initiatives.

Before you can improve data, you must understand its origin, flow, transformations, usage, and risks.

Key aspects of a complete data landscape assessment

Understanding the landscape involves asking:

- Where does the data originate? (internal systems, SaaS tools, third-party providers)

- How does it move between systems? (ETL pipelines, APIs, integrations)

- What transformations occur along the way? (calculations, merges, enrichments)

- Who uses the data? (data analysts, business users, AI models)

- Where does data quality degrade today? (manual entry, legacy tools, inconsistent processes)

This mapping, often formalized through data lineage, reveals hidden complexity and root causes of quality issues.
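As a rough illustration, lineage can be modeled as a directed graph in which each dataset points to the datasets it is built from. The sketch below is a minimal example under that assumption. The dataset names and the `UPSTREAM` mapping are hypothetical and are not taken from any particular lineage tool.

```python
from collections import deque

# Hypothetical lineage edges: each dataset maps to the datasets it is built from.
UPSTREAM = {
    "sales_dashboard": ["orders_clean", "customers_clean"],
    "orders_clean": ["orders_raw"],
    "customers_clean": ["crm_export", "web_signups"],
}


def trace_sources(dataset, upstream=UPSTREAM):
    """Return every upstream dataset that feeds `dataset`, directly or indirectly."""
    seen = set()
    queue = deque([dataset])
    while queue:
        current = queue.popleft()
        for parent in upstream.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def root_sources(dataset, upstream=UPSTREAM):
    """Return only the origin systems (datasets with no recorded upstream)."""
    return {d for d in trace_sources(dataset, upstream) if d not in upstream}
```

Calling `root_sources("sales_dashboard")` on this toy graph returns the three origin datasets, which is the kind of answer a root-cause investigation usually starts from.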

Why this matters in modern organizations

Modern data ecosystems are no longer linear. They are interconnected, multi-source, and dynamic. Without full visibility:

- AI models are trained on unreliable data.

- Duplicate or conflicting sources appear.

- Errors propagate across systems.

- Teams lose trust in dashboards and reports.

Recommended tools & practices

- Enterprise data lineage (automatic + manual)

- Business process mapping

- Source-to-target documentation

- Data usage monitoring

- Stakeholder interviews with business units

2. Implement a strong data governance framework

Data governance is the operational backbone of data quality.

Governance frameworks define roles, responsibilities, decision rights, processes, and standards that ensure consistent and trusted data across the company.

Key governance roles (entities)

- Data Owners: Accountable for data assets and business meaning

- Data Stewards: Operational guardians of data quality

- Data Consumers: Employees who use data to make decisions

- Data Engineers: Technical owners of pipelines and transformations

Data governance elements that directly improve data quality

- Clear ownership of datasets

- Standard definitions and business glossaries

- Data access controls and compliance rules

- Defined data quality SLAs and KPIs

- Processes for issue resolution and escalation

Strong governance ensures that everyone knows their role, decisions are aligned, and no data assets fall through organizational cracks.

3. Use a data catalog for transparency & discovery

A data catalog is essential for improving data quality because it brings clarity, transparency, and discoverability to data assets.

Benefits of using a modern data catalog

A data catalog allows teams to:

- Identify and understand all available datasets

- Access consistent, approved sources

- View automated data lineage to validate trust

- Understand business definitions and owners

- Assess data quality status and rules in one place

Modern catalogs (like DataGalaxy) go further with AI-assisted recommendations, automated tagging, semantic search, and contextual knowledge graphs.

Why this matters for data quality

When employees cannot find or understand data, they create copies or rely on outdated sources.

A data catalog eliminates ambiguity and accelerates the adoption of high-quality data.

4. Invest in a metadata management tool

Metadata management brings structure and intelligence to your entire data ecosystem.

It provides contextual information that helps organizations ensure consistency, accuracy, and traceability.

Types of metadata relevant to data quality

- Technical metadata: Schemas, data types, sources

- Business metadata: Definitions, descriptions, owners

- Operational metadata: Lineage, logs, pipeline runs

- Social metadata: Who uses the data and how often

Benefits of metadata management tools

Metadata tools help organizations:

- Automatically catalog and classify data assets

- Identify redundant or conflicting sources

- Detect anomalies earlier through metadata patterns

- Maintain a “single version of the truth”

- Improve AI model governance with traceable training data

5. Validate data at the point of entry

Many quality issues arise at the moment data is entered, especially in manual processes, customer-facing platforms, or legacy systems.

Examples of entry-point validation

- Mandatory field completeness

- Format and type checks

- Referential integrity validations

- Duplicate detection

- Automated rules for sensitive data

- Review workflows for exceptions

Fixing data at the source is significantly cheaper than correcting errors later in the lifecycle.
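The checks listed above can be sketched as a single validation function that runs before a record is accepted. This is only an illustrative outline: the field names, the `VALID_COUNTRY_CODES` reference set, and the deliberately simple email pattern are all assumptions, and a production system would typically rely on its own reference tables and stricter validators.

```python
import re

# Hypothetical configuration for a customer sign-up form.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # simplified format check
REQUIRED_FIELDS = ("customer_id", "email", "country_code")
VALID_COUNTRY_CODES = {"FR", "DE", "US"}  # stand-in for a reference table


def validate_record(record, existing_ids):
    """Return a list of problems; an empty list means the record passes."""
    errors = []
    # Mandatory field completeness
    for field in REQUIRED_FIELDS:
        if not str(record.get(field) or "").strip():
            errors.append(f"missing required field: {field}")
    # Format check
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        errors.append("email has an invalid format")
    # Referential integrity against the reference values
    country = record.get("country_code")
    if country and country not in VALID_COUNTRY_CODES:
        errors.append(f"unknown country_code: {country}")
    # Duplicate detection against already stored identifiers
    if record.get("customer_id") in existing_ids:
        errors.append("duplicate customer_id")
    return errors
```

Records that return a non-empty list could be rejected outright or routed to a review workflow for exceptions, depending on how strict the entry point needs to be.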

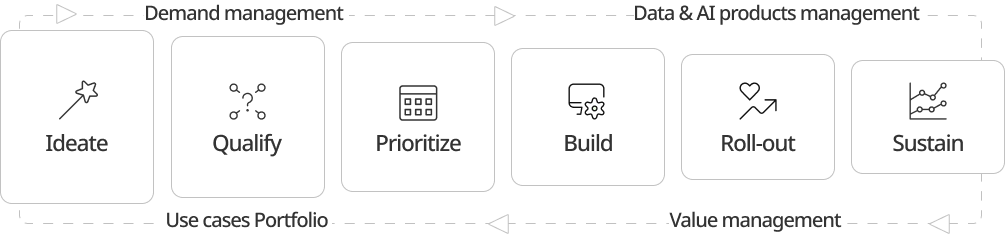

Designing data & AI products that deliver business value

To truly derive value from AI, it’s not enough to just have the technology.

- Clear strategy

- Reasonable rules for managing data

- Focus on building useful data products

6. Conduct regular audits & quality reviews

Data deteriorates over time. Values become obsolete, contexts change, and systems evolve. Regular audits are necessary to maintain long-term reliability.

What audits should check for

- Completeness of critical fields

- Accuracy against authoritative sources

- Consistency between systems

- Anomalies or unusual patterns

- Redundant data

- Compliance with quality rules

Audits support a culture of continuous improvement and ongoing governance.
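Two of these audit checks, completeness of a critical field and consistency between systems, are simple enough to sketch in a few lines. The example assumes records are plain dictionaries keyed by a shared identifier. The function names and the idea of comparing a CRM extract with a billing extract are illustrative choices, not a prescribed method.

```python
def completeness(rows, field):
    """Share of rows (0.0 to 1.0) where `field` is present and non-empty."""
    if not rows:
        return 1.0  # nothing to audit, so nothing is missing
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)


def inconsistent_keys(system_a, system_b, field):
    """Keys present in both systems whose `field` values disagree."""
    shared = system_a.keys() & system_b.keys()
    return sorted(
        key for key in shared
        if system_a[key].get(field) != system_b[key].get(field)
    )
```

Running both functions on a schedule and storing the results over time gives an audit trail that shows whether quality is improving or degrading.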

7. Educate & train your teams

Data quality is not just a technical problem. It is a cultural initiative.

Training topics to include

- Why data quality matters

- How to use the data catalog

- Proper data entry techniques

- How to interpret lineage and quality indicators

- How to report issues

- Data governance policies and roles

Well-trained teams make fewer mistakes and take greater ownership of data reliability.

8. Proactively monitor & address data quality issues

A proactive stance ensures that organizations detect and correct issues before they escalate.

Proactive data quality practices

- Continuous monitoring of quality KPIs

- Observability dashboards

- DQ anomaly detection powered by AI

- Alerts and automated workflows

- Root-cause analysis

- Quality scoring for datasets

Proactivity builds resilience and enables teams to trust data even as systems grow more complex.
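As a simple stand-in for anomaly detection, the sketch below flags a daily metric (for example a row count or a completeness score) when it drifts too far from its recent history. It uses a plain z-score rather than an AI model, and the threshold of 3.0 is an assumed default that would need tuning for real data.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it lies more than z_threshold standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly flat history is notable
    return abs(latest - mu) / sigma > z_threshold
```

A check like this would typically feed an alert or an automated workflow, so that a sudden drop in loaded rows is investigated before it reaches dashboards.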

How to create effective data quality rules

Data quality rules transform governance principles into operational, enforceable logic that can be monitored and automated.

What do data quality rules do?

- Define how data should look (format, values, ranges)

- Establish relationships that must always be respected

- Provide standardized metrics for measuring data quality

Rules make quality measurable and repeatable.
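One way to picture this is to treat each rule as a named check that returns true or false for a record, then report the share of records that pass. The sketch below assumes order records stored as dictionaries with ISO-formatted date strings. The `Rule` class, the rule names, and the thresholds are hypothetical examples.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when the record satisfies the rule


# Hypothetical rules covering a range, an allowed value set, and a relationship.
RULES = [
    Rule("quantity_in_range", lambda r: 1 <= r["quantity"] <= 1000),
    Rule("currency_allowed", lambda r: r["currency"] in {"EUR", "USD"}),
    Rule("ship_after_order", lambda r: r["ship_date"] >= r["order_date"]),
]


def pass_rates(records, rules=RULES):
    """Return {rule name: share of records passing}, a simple standardized metric."""
    if not records:
        return {}
    return {
        rule.name: sum(rule.check(r) for r in records) / len(records)
        for rule in rules
    }
```

Because every rule produces the same kind of number, pass rates can be compared across datasets and tracked over time.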

Involve managers from multiple departments

Each department has different priorities, operational processes, and quality expectations.

Co-creating rules with business stakeholders ensures:

- Better adoption

- Clearer definitions

- Stronger alignment with business outcomes

- Shared ownership of data quality

Create a reasonable number of rules

Too many rules make systems slow and teams frustrated. Too few rules lead to unreliable data.

Focus first on:

- Critical data elements (CDEs)

- Regulatory or compliance-driven fields

- AI training datasets

- Business-impacting KPIs

Start small and iterate progressively.

Adopt a step-by-step approach

Do not attempt to govern everything at once.

Recommended sequence

- Identify critical data elements (CDEs)

- Define essential rules

- Implement monitoring

- Evaluate impact and refine

- Expand scope

This iterative approach mirrors best practices in modern data product governance.

Write rules based on data types & importance

Not all data deserves the same scrutiny.

Example: Employee data

| Field | Criticality | Required rules |
|---|---|---|
| Employee Full Name | High | Completeness, uniqueness, format |
| Employee Phone Number | Medium | Format, accuracy |
| Department Code | High | Valid reference values, consistency |

Clear rules ensure consistent quality and avoid ambiguity.
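A subset of the rules in the table could be checked along the following lines. This is a sketch under several assumptions: the field names (`full_name`, `phone`, `department_code`), the `VALID_DEPARTMENTS` reference set, and the loose phone pattern are all invented for illustration, and accuracy checks are left out because they require an authoritative source to compare against.

```python
import re
from collections import Counter

VALID_DEPARTMENTS = {"FIN", "HR", "ENG"}              # hypothetical reference values
PHONE_PATTERN = re.compile(r"^\+?[0-9 ()\-]{7,20}$")  # deliberately loose format check


def check_employees(employees):
    """Return (row index, field, problem) tuples for the rules in the table."""
    issues = []
    # Count names case-insensitively so "Ada" and "ada" collide.
    name_counts = Counter(
        (e.get("full_name") or "").strip().lower() for e in employees
    )
    for i, e in enumerate(employees):
        name = (e.get("full_name") or "").strip()
        if not name:
            issues.append((i, "full_name", "missing"))              # completeness
        elif name_counts[name.lower()] > 1:
            issues.append((i, "full_name", "duplicate"))            # uniqueness
        phone = e.get("phone")
        if phone and not PHONE_PATTERN.match(phone):
            issues.append((i, "phone", "bad format"))               # format
        if e.get("department_code") not in VALID_DEPARTMENTS:
            issues.append((i, "department_code", "unknown value"))  # reference values
    return issues
```

Note that the medium-criticality phone field is only checked when a value is present, while the high-criticality fields are always checked, which mirrors the idea that not all data deserves the same scrutiny.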

Centralized vs. decentralized rule storage

Enterprises must choose how they organize rules:

Centralized storage

- One rule set for all departments

- Higher consistency

- Easier governance

- Ideal for regulated industries

Decentralized storage

- Flexibility for business units

- Adapted to local processes

- Useful for global or multi-brand organizations

Many organizations adopt a hybrid approach using federated governance.
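One possible shape for such a hybrid is a central rule set that business units can narrow or extend with local overrides. The sketch below assumes rules are stored as nested dictionaries. The unit name `retail_fr` and the rule parameters are hypothetical.

```python
# Hypothetical central rules maintained by the governance team.
CENTRAL_RULES = {
    "customer_email": {"required": True, "max_length": 254},
    "country_code": {"required": True, "allowed": {"FR", "DE", "US"}},
}

# Hypothetical local overrides maintained by individual business units.
LOCAL_OVERRIDES = {
    "retail_fr": {"country_code": {"allowed": {"FR"}}},
}


def effective_rules(unit, central=CENTRAL_RULES, overrides=LOCAL_OVERRIDES):
    """Merge central rules with a business unit's overrides, field by field."""
    # Copy each field's parameters so the central definitions are never mutated.
    merged = {field: dict(params) for field, params in central.items()}
    for field, params in overrides.get(unit, {}).items():
        merged.setdefault(field, {}).update(params)
    return merged
```

Units without overrides simply inherit the central rules, which keeps consistency as the default and makes every local deviation explicit.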

DataGalaxy for improving & sustaining data quality (2026)

DataGalaxy is the industry’s leading Data & AI Product Governance platform, designed to help organizations operationalize data quality at scale with automation, collaboration, and visibility.

Key capabilities for data quality excellence

Centralized Data Catalog

Helps teams find, understand, and trust data, with automated metadata ingestion and a knowledge graph-based visualization.

Enterprise metadata management

Automatically documents technical, business, and operational metadata to improve consistency and traceability.

End-to-end data lineage

Tracks data from source to consumption, ensuring transparency for analytics and AI use cases.

Data quality scoring & monitoring

Measure, enforce, and visualize quality levels directly from the platform.

Collaborative governance workspace

Break silos by enabling data owners, stewards, and business teams to work together on rules and policies.

AI product governance

Ensure that training datasets, features, and model outputs are reliable, traceable, and compliant.

DataGalaxy allows organizations to transform data quality from a technical task into a strategic business capability.

The right data quality rules can make all the difference in the world. If you are wondering how to get started, don’t worry: There’s no need to reinvent the wheel.

If a good set of rules already exists within your organization, find out who’s in charge of maintaining it and ask them for advice. If you don’t have a formal set of rules yet, that’s okay too.

Your company’s data is unique and probably warrants an approach tailored to its needs. Find out what makes the most sense for your organization and start implementing it today!

FAQ

- How do I start a data governance program?

To launch a data governance program, identify key stakeholders, set clear goals, and define ownership and policies. Align business and IT to ensure data quality, compliance, and value. Research best practices and frameworks to build a strong, effective governance structure.

- How do I implement data governance?

To implement data governance, start by defining clear goals and scope. Assign roles like data owners and stewards, and create policies for access, privacy, and quality. Use tools like data catalogs and metadata platforms to automate enforcement, track lineage, and ensure visibility and control across your data assets.

- How do you build a data product?

Building a successful data product begins with a clear business need, trusted data, and user-focused design. DataGalaxy simplifies this process by centralizing data knowledge, fostering collaboration, and ensuring data clarity at every step. To create scalable, value-driven data products with confidence, explore how DataGalaxy can help at www.datagalaxy.com.

- How do you improve data quality?

Improving data quality starts with clear standards for accuracy, completeness, consistency, and timeliness. It involves profiling, fixing anomalies, and setting up controls to prevent future issues. Ongoing collaboration across teams ensures reliable data at scale.

- How is value governance different from data governance?

Value governance focuses on maximizing business outcomes from data initiatives, ensuring investments align with strategic goals and deliver ROI. Data governance, on the other hand, centers on managing data quality, security, and compliance. While data governance builds trusted data foundations, value governance ensures those efforts translate into measurable business impact.

Key takeaways

- Data quality is a continuous effort, not a one-time fix.

- Strong governance, metadata management, and data catalogs are essential.

- Data quality rules must be co-created, iterative, and business-aligned.

- Proactive monitoring prevents small issues from becoming costly problems.

- DataGalaxy provides the most comprehensive solution for Data & AI Product Governance.